这几天学习了Python爬虫有关的知识,自己做了一个简单的实例:爬取熊猫直播某板块的主播信息。本实例使用Requests +BeautifulSoup和爬虫框架Scrapy两种方法。

BeautifulSoup可以从HTML或XML文件中提取数据,Requests则用于读取网络资源。虽然Python内置的urllib模块也可以读取网页,但Requests使用起来要更方便。首先需要确定要爬取的URL,这里我选择了熊猫直播的“守望先锋”板块,网址为https://www.panda.tv/cate/overwatch。先来看一下网页的源码:

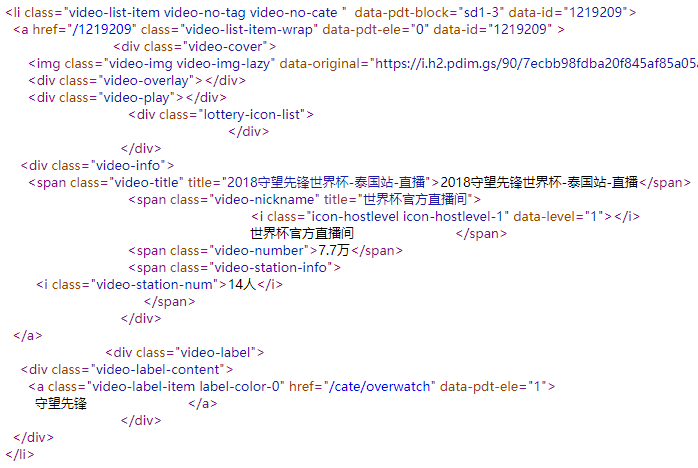

<li>标签下,再具体些,我们想要的内容在<a>标签下,其href属性显示了主播的房间号,和熊猫直播的基础URL https://www.panda.tv结合就可得出直播间的URL。<span>标签的“video-nickname”和“video-number”两个class则是我们需要的另外两个信息。HTML分析完后就可以编写代码了:

from bs4 import BeautifulSoup

import requests

base_url = 'https://www.panda.tv'

URL = 'https://www.panda.tv/cate/overwatch'

r = requests.get(URL)

r.encoding = "utf-8"

html = r.text

soup = BeautifulSoup(html, 'lxml')

info = soup.findAll('a', {"class": "video-list-item-wrap"})

print('主播人数:', len(info))

for streamer in info:

room = streamer['href']

nickname = streamer.find('span', {'class': 'video-nickname'})['title']

popularity = streamer.find('span', {'class': 'video-number'}).string

print(base_url + room, nickname, popularity)

有几个需要注意的点。网页的字符编码是“UTF-8”,所以需要用将requests的encoding属性设定为“UTF-8”,否则提取的HTML中中文会出现乱码。我在BeautifulSoup中使用了第三方的lxml解析器,与Python内置标准库相比,它的解析效率比较高。输出结果如下:

主播人数: 21

https://www.panda.tv/683603 Kr丶智妍 1735

https://www.panda.tv/1546645 昕星ya 575

https://www.panda.tv/1219209 世界杯官方直播间 13.1万

https://www.panda.tv/1192964 叶秋Decadent 2707

https://www.panda.tv/619462 熊猫丶刑天 1947

https://www.panda.tv/1817064 Ink2k 1355

https://www.panda.tv/469332 熊猫小金猪 1071

https://www.panda.tv/1153106 勺绿YA 746

https://www.panda.tv/1043517 亲亲小陆童鞋 650

https://www.panda.tv/1702668 困兽欸儿 177

https://www.panda.tv/291657 熊猫丶2喵 109

https://www.panda.tv/1859008 伤感蜘蛛侠 79

https://www.panda.tv/628904 放牛班的小黑 55

https://www.panda.tv/795973 熊猫丶三岁萌萌哒 36

https://www.panda.tv/734027 妙喵不可言 36

https://www.panda.tv/1990546 _最咸的鱼 21

https://www.panda.tv/2060694 Mujiplaygame 10

https://www.panda.tv/639004 超级大叔志 3

https://www.panda.tv/1243928 梦槡 3

https://www.panda.tv/2181623 伊森丶King 2

https://www.panda.tv/992169 YourSugarDaddy1 1

第二种方法是使用Scrapy。Scrapy是一个高效的爬虫框架,整合了爬取、处理数据、存储数据等多种功能。一般来说,爬取之前要创建一个新的Scrapy项目:scrapy startproject scrapyspider。然后在文件夹spiders下建立新文件编写代码:

class StreamersSpider(scrapy.Spider):

name = "streamers"

start_urls = [

'https://www.panda.tv/cate/overwatch',

]

def parse(self, response):

base = 'https://www.panda.tv'

for streamer in response.css('a.video-list-item-wrap'):

yield {

'url': base + streamer.css('a::attr("href")').extract_first(),

# 'name': streamer.css('div.video-info span.video-nickname::attr("title")').extract_first(),

'name': streamer.xpath('div[@class="video-info"]/span[@class="video-nickname"]/@title').extract_first(),

'pop': streamer.xpath('div[@class="video-info"]/span[@class="video-number"]/text()').extract_first(),

}

创建Spider必须继承scrapy.Spider类, 定义name、start_urls两个参数和parse方法,调用时,生成的Response对象会传递给该函数。提取数据需要用到选择器,Scrapy使用了基于XPath和CSS表达式机制。代码中对两种表达式都有所体现。另外,XPath也可由工具获取,如在Chrome浏览器中,可以右键元素,高亮这段HTML,再次右击,选择 ‘Copy XPath’。“url”代表直播间网址,“name”代表主播名,“pop”则代表人气。

Scrapy项目和普通Python项目运行时有点不同,本项目使用以下代码运行:scrapy crawl streamers -o res.json。“streamers”是代码中的name参数,res.json则保存爬取的数据,这里我在运行时,中文是以Unicode表示,需要在项目中的setting.py中添加代码:FEED_EXPORT_ENCODING = 'utf-8',方能正常显示。

[

{"url": "https://www.panda.tv/683603", "name": "Kr丶智妍", "pop": "1735"},

{"url": "https://www.panda.tv/1546645", "name": "昕星ya", "pop": "575"},

{"url": "https://www.panda.tv/1219209", "name": "世界杯官方直播间", "pop": "13.1万"},

{"url": "https://www.panda.tv/1192964", "name": "叶秋Decadent", "pop": "2707"},

{"url": "https://www.panda.tv/619462", "name": "熊猫丶刑天", "pop": "1947"},

{"url": "https://www.panda.tv/1817064", "name": "Ink2k", "pop": "1355"},

{"url": "https://www.panda.tv/469332", "name": "熊猫小金猪", "pop": "1071"},

{"url": "https://www.panda.tv/1153106", "name": "勺绿YA", "pop": "746"},

{"url": "https://www.panda.tv/1043517", "name": "亲亲小陆童鞋", "pop": "650"},

{"url": "https://www.panda.tv/1702668", "name": "困兽欸儿", "pop": "177"},

{"url": "https://www.panda.tv/291657", "name": "熊猫丶2喵", "pop": "109"},

{"url": "https://www.panda.tv/1859008", "name": "伤感蜘蛛侠", "pop": "79"},

{"url": "https://www.panda.tv/628904", "name": "放牛班的小黑", "pop": "55"},

{"url": "https://www.panda.tv/795973", "name": "熊猫丶三岁萌萌哒", "pop": "36"},

{"url": "https://www.panda.tv/734027", "name": "妙喵不可言", "pop": "36"},

{"url": "https://www.panda.tv/1990546", "name": "_最咸的鱼", "pop": "21"},

{"url": "https://www.panda.tv/2060694", "name": "Mujiplaygame", "pop": "10"},

{"url": "https://www.panda.tv/639004", "name": "超级大叔志", "pop": "3"},

{"url": "https://www.panda.tv/1243928", "name": "梦槡", "pop": "3"},

{"url": "https://www.panda.tv/2181623", "name": "伊森丶King", "pop": "2"},

{"url": "https://www.panda.tv/992169", "name": "YourSugarDaddy1", "pop": "1"}

]